I just attached a postgres database that I know has several tables in the public schema. However, when I try to use the schema preview tool in datalore, I get a ‘schema empty’ notice. I’m guessing this is a permissions error. Has anyone figured out what grants need to be provided to a role for the full schema to show up in the editor?

@igro any advice for getting some attention on this question?

Hi @lonelytubu ,

You might lack USAGE privilege on the schemas (please refer to the official PostgreSQL documentation for more details):

Essentially this allows the grantee to “look up” objects within the schema. Without this permission, it is still possible to see the object names, e.g., by querying system catalogs.

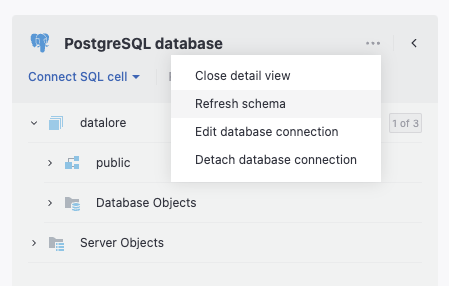

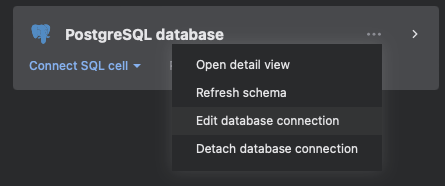

Apart from the New connection and Edit connection dialogs you can also try to refresh the schemas from the attached datasource tile:

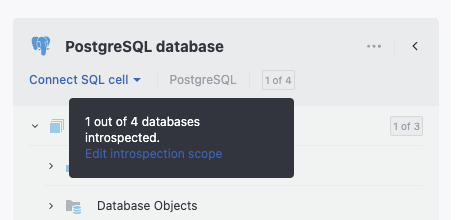

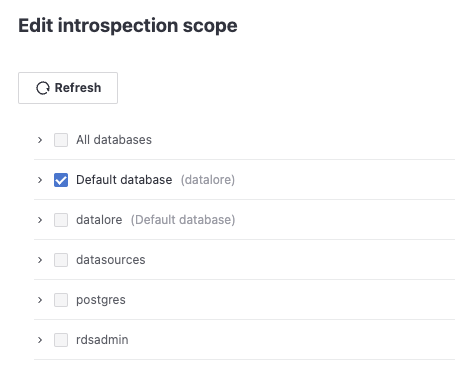

You can also edit the introspection scope from there as well:

@igro thanks for the feedback. I do have usage set for the profile on the public schema. When I use the refresh button I see an error saying that the introspection failed. Is there a way I can see more detailed logs?

@lonelytubu are there any errors printed when you click Edit database connection → “Test connection” button?

@igro the test connection button shows a success message like the one in your screenshot. I also tried the ‘Refresh’ button in the ‘Schemas’ tab’ with the network traffic inspector on. I see a POST request for load-namespaces get sent, but it never returns. I don’t see any other warning messages. Any ideas what happens in this request?

Just to be sure, I logged into my database with the user I provisioned for datalore and ran a couple queries to ensure that the user has access. They all came back okay. Let me know if you have ideas for other queries I can test to make sure the permissions are correct:

-

SELECT * FROM pg_catalog.pg_tables

-

SELECT table_schema||‘.’||table_name AS full_rel_name

FROM information_schema.tables

WHERE table_schema = ‘public’;

full_rel_name -

SELECT column_name

FROM information_schema.columns;

I also don’t see anything in the postgres logs

@lonelytubu I see, thank you. Do you use Datalore Enterprise (on premises)? Currently, the only way to get the detailed error message would be to check the server log in the database command container.

PS: I also filed an improvement request to indicate the process and the final result for “refresh schemas” action in the dialog - but at the moment, I can’t provide any estimates when it will be implemented

Currently, the only way to get the detailed error message would be to check the server log in the database command container.

On our cloud instance it would be very hard to catch the required SQL session, but as an option - If it would be acceptable to you - you can create and share with me a separate account which I can use to reproduce the issue on my local test environment.

Hey @igro

I am not using an enterprise instance, I just have the professional plan. When you say create an account, do you mean a new role in the postgres environment?

Yes, postgres role to reproduce the issue.

By the way, could it be the case, that the schemas are huge and it takes significant amount of memory to introspect them?

No, the schema is very small. Let me look into whether I can share access. The database is actually connected through an ssh tunnel, so I may need to get my IT team involved to create a new token and user.

Could you please also check, if you are able to introspect the same database using any other database client (DataGrip)?

Good idea. I just tried that and was able to connect using DataGrip no problem. I see schemas, roles, tables, etc. I just double checked all the settings in DataLore and things look the same, but the introspection error in DataLore hasn’t changed

Thank you for checking this! I’ll ask my colleagues to look into this issue as well, and will get back to you with the updates next week.

On our cloud instance it would be very hard to catch the required SQL session

We might actually try this option – with your cooperation it should be possible to check the logs of the session before the pod is terminated, will ask devops/devs regarding the steps and contact you over DM to sync our efforts.

I’m sorry it taking so long to investigate!

We managed to find the following error for the introspection attempt: SSH tunnel creation failed: Connection refused., not sure why does it happen – could you please check with the IT team connection attempts on your side?

Thanks for investigating. Based on your advice I looked at the /var/auth.log file on the server I’m using. I found the following associated with an attempt to refresh the schema:

sshd[381007]: Accepted password for xxxx from xxxxxxx port 12605 ssh2

sshd[381007]: pam_unix(sshd:session): session opened for user xxxxx(uid=xxxxx) by (uid=x)

systemd-logind[869]: New session 8588 of user xxxxx.

sshd[381064]: error: connect_to localhost port 41529: failed.

sshd[381064]: Received disconnect from xxxxx port 12605:11:

sshd[381064]: Disconnected from user nickmsohn xxxxxx port 12605

sshd[381007]: pam_unix(sshd:session): session closed for user xxxx

systemd-logind[869]: Session 8588 logged out. Waiting for processes to exit.

systemd-logind[869]: Removed session 8588.

Looks like there’s an attempt to attach to an unexpected port. I’m not sure if the specific port numbers here will be meaningful to you, as I think our IT team have some NAT rules in place, but perhaps there is a port that DataLore is trying to access that is not in the normal documentation?

Thank you for checking this up! We reuse DataGrip components under the hood – when you were testing the introspection there, were you also utilizing SSH tunnel, or did you connect directly? If latter, could you please try connecting using SSH tunnel in DataGrip as well?

I used an ssh tunnel with Datagrip as well. The only other difference I can think of is that there would be different IP allowlist rules for when I tried using datagrip vs what has been approved for DataLore’s server. I’ll ask our IT team to get a breakdown of what is used where, but any details you can provide on required ports would be helpful too

I was able to reproduce the same issue on my own database – aprox. 1 of 5 requests fails with the same SSH tunnel creation failed: Connection refused. error and I see the same error: connect_to localhost port 38183: failed. log entries for such attempts in the auth.log.

Will contact DataGrip team and get back to you as soon as I have any updates on this.

I managed to reproduce the issue in DataGrip (IDEA), and there it reproduces each time for me, still not sure what causes it and why it sometimes work and sometimes not.

Filed a new ticket to the DataGrip team: